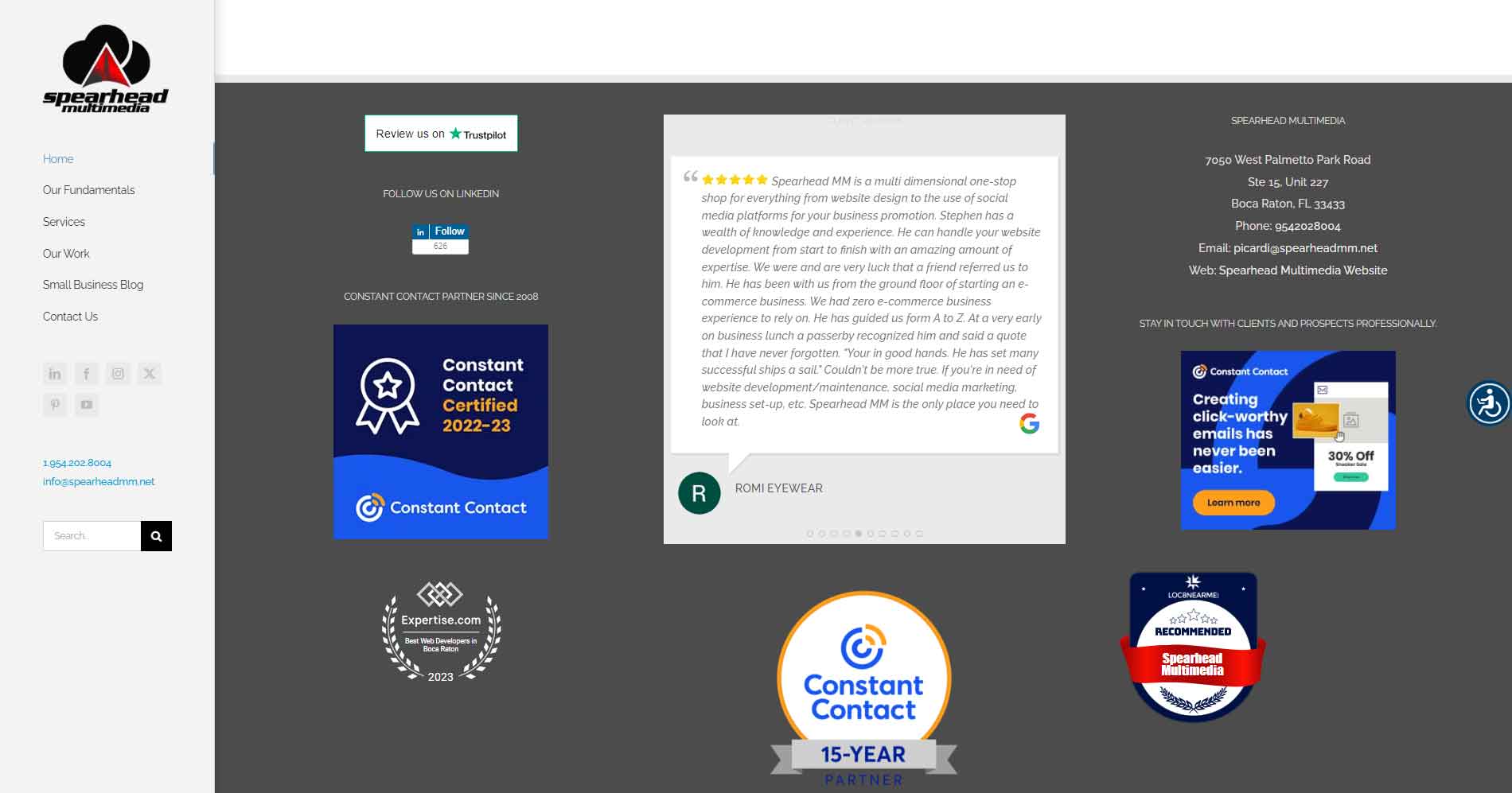

Welcome to the Spearhead Multimedia Small Business Tips and Tricks blog page.

Here you will find articles to help you grow your business and increase your customer base. Topics range from simple mistakes that can be costly to tips on social media, bulk emailing, storytelling, and content creation.

We hope you enjoy our small business tips and that they help your business succeed and improve your marketing ROI. We can improve your website and marketing plan significantly. Reach out to us today to see what we can do to help your bottom line.

How to Remove Negative Reviews Online and Protect Your Online Reputation

In the digital age, maintaining a positive online reputation is crucial for businesses, as customer reviews on the internet heavily influence brand perception and purchasing decisions. This article [...]

Mastering Your Marketing Automation Strategy: A Comprehensive Guide

As a busy small business owner/marketing professional, you have a lengthy to-do list. Crafting engaging content, launching email campaigns, analyzing data, and other routine tasks can quickly pack [...]

SEO Combination You Need to Win Google’s Algorithm

For an online presence that delivers results and high rankings long term, create a strategy that focuses on an SEO combination of best practices and E-E-A-T guidelines — [...]

5 Powerful Strategies to Help Your Website Rank

Do you need help to rank well on Google due to new algorithm updates? Discover five SEO strategies that work now. Search engine optimization (SEO) [...]

Build Long-Term Customer Loyalty With These 3 Relationship Marketing Strategies

Customer relationships shouldn't end after a sale. In fact, the best relationships never end. Key Takeaways Resonating with customers and building long-term loyalty are crucial to [...]

Using this 1 magic word more often can make you 50% more influential, says Harvard study

Sometimes, it takes a single word — like “because” — to change someone’s mind. That’s according to Jonah Berger, a marketing professor at the Wharton School of [...]

9 SEO Myths to Say Goodbye to This Year

Here are the most common SEO myths that may be hurting your rankings. Although most entrepreneurs understand the importance of search engine optimization (SEO), the online [...]

Using “Super Bowl” and NFL Terminology in Marketing

Use of NFL Terminology in Marketing Without the express written permission from the NFL and/or the teams involved, you may not use the following, or [...]

Google Ads for lead gen: 9 tips to scale low-spending campaigns

Looking to elevate your Google Ads lead gen efforts? Here are nine levers that can boost your PPC campaigns toward significant growth. Are you ready to [...]

10 Customer Retention Strategies For Essential Growth in 2024

"Make new friends, but keep the old. One is silver, and the other’s gold." This classic adage doesn’t just apply to personal friendships but also to business-customer [...]

10 Essential Tips To Completely Optimize Your Google Business Profile

Use this guide to optimize your Google Business Profile for better search visibility on relevant queries by motivated customers near you. Google Business Profile is a [...]

January Holidays and Highly Effective Newsletter Ideas – 2024

A whole new year. And it’s time to decide what you will do with it. With days like Motivation and Inspiration Day (Jan. 2nd) and National CanDo Day (Jan. 4th), [...]

Business Awards Get Your Business Noticed.

Knowing how to go after important recognition awards and then leverage them can have a long-term impact on your business. Companies all across the country are [...]

5 SEO Tips to Grow Your Small Business

The five best ways to leverage SEO to grow your small business. Many small businesses often struggle to attract quality leads to their website. That's because [...]

4 Ideas for Your Holiday Marketing Campaign

Holiday Marketing Campaign Ideas for Your Business The holidays are a great time to boost your business and connect with your customers. Here are some campaign ideas [...]

How to Maximize Your Email Results This Holiday Season

If there’s one channel you’re wise to focus on this holiday season, it’s email. Its efficiency is undeniable: email offers a potential return on investment (ROI) of $42 [...]

6 Ways to Ace Social Media Branding for Your Startup

Social media offers a level playing field for startups to build their brand presence, but the high competition necessitates a strategic and streamlined social media branding approach. [...]

Transform Your Small Business with Generative AI

Generative AI presents a wealth of opportunities for small enterprises across diverse industries. The article discusses how small businesses can use generative AI to improve their [...]

If something on this list is what you are looking for, you have found the right place.

- business tips of the day

- online small business tips

- top 10 small business ideas

- small business list

- small business ideas at home

- business tip of the week

- small business owners

- small business ideas

- small business management

- successful small business

- start a small business

- businesses to start

- create a business

- small business most successful

- starting a small business